Auditing design system health with time and a spreadsheet

“The best tool available today for exploring real-life questions of quantity and change is the spreadsheet.” — Bret Victor, “Kill Math”

There are many reasons and ways to audit a design system.

The most common is probably auditing your suite of products for uses of various component types (buttons, dropdowns, checkboxes) to work out where your organization’s biggest component needs are, so that you can prioritize accordingly.

Another is taking an existing design system and checking it for quality, consistency, and general system health. This is the type I’ll focus on today.

Not everyone is fortunate enough to work in companies with dedicated design system teams. Often you’re just bootstrapping something that solves some of your problems until a critical mass is reached and you can secure some internal investment.

You may not have well-honed metrics or analytics for measuring the health of your system yet. You’ll probably be relying on internal surveys and a good spreadsheet or two.

Story time

It was late 2019, and version 1 of Onfido’s design system had been in place for around a year. The system’s initial creation was a mix of learnings from high-profile examples, best practices, articles from the time, and a healthy dose of ‘let’s just try it’. Over that year, we’d learned more about what was working for us as a company, and what wasn’t. We had a sense of the areas we’d lacked rigor on, because of time or other constraints, and had passionate people in place ready to take the next step and build a more usable, flexible, and mature version of the system, which we would end up calling Castor.

We had a strong vision for Castor, but it required investment, and that required us to take a more rigorous and structured approach: one that we could use to start thinking about metrics, priorities, effort, and impact, and to build a solid case.

We then put together a list of objectives for the first phase of the project:

Objective 1: Create a complete inventory list of components

Objective 2: Gap analysis between current and future/desired state

Objective 3: Shortlist of the most impactful components to start with

Strictly speaking, these are more tasks than objectives, but hey, we’ve all grown a lot in three years.

There were no existing metrics in place for V1 of the system, so we had to start from scratch with the resources we did have: a few part-time designers and a spreadsheet.

What “good” looks like

We knew that in order to show where we wanted to take the system, we needed to define what “good” was, and why V1 wasn’t up to scratch. A small group of designers and engineers collaborated to come up with a series of checklists that encompassed the capabilities and attributes that we believed constituted high-quality components.

Note: These lists are presented as they were when we first came up with them. They have since evolved and look different for us internally. I suggest using the items below to spark discussion within your team, but creating your own quality bars that reflect your team’s values.

Design

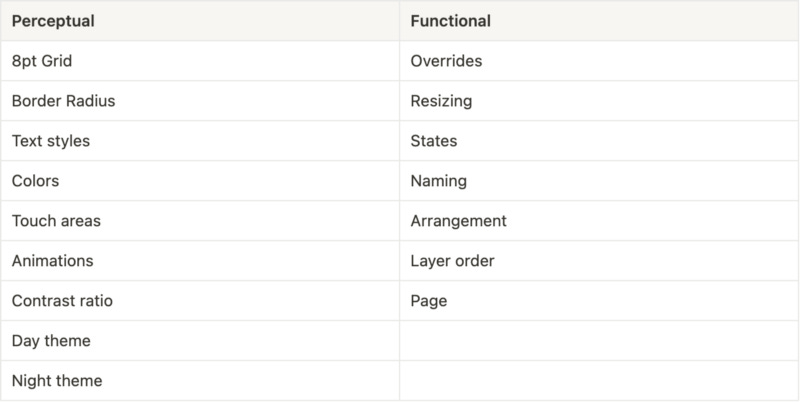

After a lot of discussion, we settled on a set of checklist items grouped into two (fairly arbitrary) categories, Perceptual and Functional. Roughly, these described things that were visible to the end user and things that affected the designers using the components.

Engineering

Engineering did a similar exercise and agreed on the following quality metrics:

Documentation

Documentation was a little lighter to start, but we agreed on a bare minimum.

Conducting the audit

Once we had settled on the areas above, we set up a spreadsheet and agreed to evaluate each aspect qualitatively, marking each component against each quality criterion as Pass, Fail, Partial, or N/A.
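If the sheet is exported as CSV, a short script can catch cells that have drifted from the four agreed statuses (a lowercase "fail", a stray blank) before any scoring happens. This is a minimal sketch, not our actual tooling: it assumes a hypothetical export layout with a `Component` column followed by one column per quality criterion, and the function name `find_invalid_cells` and the sample criteria are placeholders.

```python
import csv
import io

# The four statuses agreed for the audit; anything else is flagged as a data-entry error.
ALLOWED_STATUSES = {"Pass", "Fail", "Partial", "N/A"}


def find_invalid_cells(csv_text: str) -> list[tuple[str, str, str]]:
    """Return (component, criterion, value) for every cell that isn't an allowed status.

    Assumes a hypothetical export layout: the header row starts with a
    "Component" column and every other column is one quality criterion.
    """
    problems = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        component = row["Component"]
        for criterion, value in row.items():
            # Skip the name column and any overflow cells beyond the header (key None).
            if criterion in (None, "Component"):
                continue
            cleaned = (value or "").strip()  # a missing cell shows up as an empty string
            if cleaned not in ALLOWED_STATUSES:
                problems.append((component, criterion, cleaned))
    return problems


# Invented sample: two components scored against two placeholder criteria.
sample = """Component,Contrast,Touch area
Button,Pass,Partial
Dropdown,fail,N/A
"""
print(find_invalid_cells(sample))  # [('Dropdown', 'Contrast', 'fail')]
```

Flagging rather than auto-correcting keeps the qualitative judgment with whoever filled in the cell.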

From here, we put together a few quick formulas to get a (very coarse and lossy) percentage score for overall system health, split between Design and Code, that we could set targets against.

We counted all the cells that were not N/A, then calculated the percentage of those remaining cells that were marked Pass. We didn’t count Partial, but if you wanted to, you could include Partial cells at a lower weight (half value, say). For us, though, partial accessibility, for example, still doesn’t necessarily mean accessible.
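The calculation described above can be sketched in Python as follows. It is an illustration under assumptions rather than the formulas we actually used: `health_score` is a hypothetical name, statuses are plain strings, and `partial_weight` is the optional lower weight for Partial cells (0 by default, which matches leaving them out). The sample cells are invented purely to show the arithmetic.

```python
from collections.abc import Iterable


def health_score(statuses: Iterable[str], partial_weight: float = 0.0) -> float:
    """Percentage score for one area (e.g. Design or Code).

    N/A cells are left out of the denominator entirely. Pass counts as 1,
    Fail as 0, and Partial as `partial_weight` (0 by default, i.e. not counted).
    """
    weights = {"Pass": 1.0, "Partial": partial_weight, "Fail": 0.0}
    applicable = [s for s in statuses if s != "N/A"]
    if not applicable:
        raise ValueError("no applicable cells to score")
    # Unknown statuses raise KeyError rather than silently counting as a fail.
    points = sum(weights[s] for s in applicable)
    return 100 * points / len(applicable)


# Invented cells, flattened across components, just to show the arithmetic.
audit = {
    "Design": ["Pass", "Pass", "Fail", "N/A", "Partial"],
    "Code": ["Pass", "Fail", "Fail", "N/A"],
}
print({area: round(health_score(cells), 1) for area, cells in audit.items()})
# {'Design': 50.0, 'Code': 33.3}
print(health_score(audit["Design"], partial_weight=0.5))  # 62.5
```

In the spreadsheet itself, the default ratio can be expressed with something like `=COUNTIF(B2:K40,"Pass")/(COUNTA(B2:K40)-COUNTIF(B2:K40,"N/A"))`, where the range is a placeholder for one area’s cells. COUNTA only counts non-empty cells, so unscored blanks drop out of the denominator just as N/A cells do.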

Targets and insights

V1 came out around 58% for Design and 32% for Code. Definitely not good enough.

The audit gave us some initial learnings that we took into the next phase:

We needed a more robust way of handling light/dark mode, as dark mode support was non-existent.

Our touch-area sizes needed improvement across all components.

There were huge gaps in accessible contrast levels.

Animations were inconsistently implemented.

The gap between design and code quality was far too wide.

Outcomes

We got a few different benefits out of conducting this audit:

It gave us a framework to set targets and measure improvement against as we proceeded with the work. We set a target of 75% for both code and design and went on to exceed it in later quarters.

It solidified assumptions we had about the health of various parts of the system with evidence that we could more easily cite in documents to stakeholders.

The process of discussing quality bars across the design and engineering teams helped us understand each other’s constraints and processes, and build a more collaborative way of working.

The process helped inform which metrics to focus on improving further, both to increase precision and reduce effort.
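With a fixed target, progress can be tracked quarter by quarter as the number of percentage points each area still has to gain. This is a minimal sketch that uses only the figures quoted in this article (58% for Design, 32% for Code, and the 75% target); `points_to_target` is a hypothetical helper name.

```python
# Figures quoted in this article: the V1 audit scores and the 75% target.
V1_SCORES = {"Design": 58.0, "Code": 32.0}
TARGET = 75.0


def points_to_target(scores: dict[str, float], target: float) -> dict[str, float]:
    """Percentage points each area still needs to gain (0 once the target is met)."""
    return {area: max(0.0, target - score) for area, score in scores.items()}


print(points_to_target(V1_SCORES, TARGET))  # {'Design': 17.0, 'Code': 43.0}
# The design/code gap called out in the audit findings, in percentage points.
print(V1_SCORES["Design"] - V1_SCORES["Code"])  # 26.0
```

Re-running the same calculation on each new audit gives a simple trend line without any extra tooling.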

I’d recommend a similar exercise to anyone wanting to try and push their design system health forward in environments where you’re resource-constrained (and let’s face it, that’s most of us) and where you lack more sophisticated metrics tools.

Thanks for reading. If you enjoyed this article, I also wrote recently about the next stage in our metrics journey, tracking design system adoption.

Thanks to Rebecca Reynolds, Anand Nagrick, and Mark Opland for helping make this story better. Originally posted on Medium.